Yoad Tewel

I am a research scientist at NVIDIA Research and a Computer Science PhD candidate at Tel-Aviv University, working in the Deep Learning Lab under the supervision of Prof. Lior Wolf.

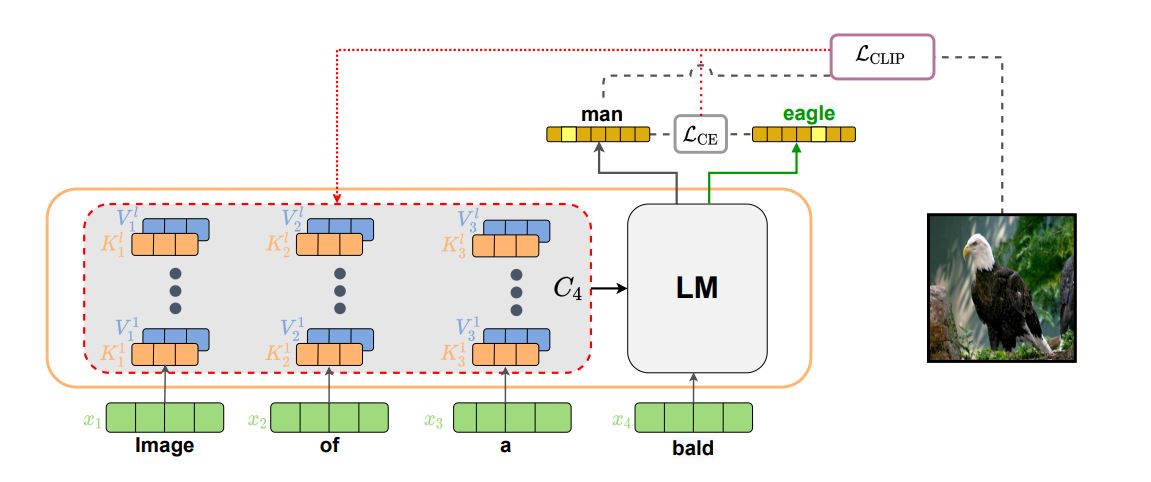

My research focuses on the intersection of computer vision, natural language processing, and machine learning. In particular, I am exploring ways to leverage text-and-image foundation models for solving zero-shot tasks.